Chapter 2 Correlation and Simpson’s Paradox

Consider the following conceptual graph. This is a simple causal graphical model which will be revisited and explained in detail later in Chapter 5. For now, simply interpret an arrow as a causal relationship. In Figure 2.1, \(X\) and \(Y\) share a common cause \(U\). Structures like this naturally induce a correlation between \(X\) and \(Y\).

Figure 2.1: Graphical model for mutual dependence, a.k.a. a fork.

To see this, let \(U\sim N(0,\rho^2)\) for some \(0<\rho<1\), and let \(\epsilon_1\) and \(\epsilon_2\) be independent noise terms, each following \(N(0,1-\rho^2)\) and independent of \(U\). Let \(X = U + \epsilon_1\) and \(Y = U + \epsilon_2\). Then \(X\) and \(Y\) are bivariate normal with mean 0 and variance \(\rho^2 + (1-\rho^2) = 1\). Their covariance is \(\mathrm{Cov}(X,Y) = \mathrm{Var}(U) = \rho^2\), which is also their correlation because both variances equal 1.

A positive correlation between \(X\) and \(Y\) means that a relatively large value of \(X\) is associated with a relatively large value of \(Y\). In fact, \[\begin{equation*} \mathrm{E}(Y|X = x) = \rho^2\times x. \end{equation*}\]

Does it mean increasing \(X\) has a causal effect on increasing \(Y\)? No! In the ground-truth model, \(X\) and \(Y\) are both noisy versions of the common underlying signal \(U\). By symmetry, the following is also true: \[\begin{equation*} \mathrm{E}(X|Y = y) = \rho^2\times y. \end{equation*}\]
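A quick simulation makes the symmetry tangible. The sketch below uses Python with NumPy; the value \(\rho = 0.8\), the sample size, and the seed are arbitrary choices for illustration. It draws from the fork and checks that the sample correlation and both regression slopes land near \(\rho^2 = 0.64\).

```python
import numpy as np

def simulate_fork(rho, n, seed=0):
    """Draw n samples of (X, Y) from the fork U -> X, U -> Y."""
    rng = np.random.default_rng(seed)
    u = rng.normal(0.0, rho, size=n)               # U ~ N(0, rho^2); scale is a std dev
    noise_sd = np.sqrt(1.0 - rho**2)               # eps_i ~ N(0, 1 - rho^2)
    x = u + rng.normal(0.0, noise_sd, size=n)      # X = U + eps_1
    y = u + rng.normal(0.0, noise_sd, size=n)      # Y = U + eps_2
    return x, y

rho = 0.8
x, y = simulate_fork(rho, n=200_000)

corr = np.corrcoef(x, y)[0, 1]
# Both variables have mean 0, so regression through the origin estimates
# the slopes of E(Y | X = x) and E(X | Y = y).
slope_y_on_x = np.dot(x, y) / np.dot(x, x)
slope_x_on_y = np.dot(x, y) / np.dot(y, y)

print(f"rho^2 = {rho**2:.3f}, sample correlation = {corr:.3f}")
print(f"slope of Y on X = {slope_y_on_x:.3f}, slope of X on Y = {slope_x_on_y:.3f}")
```

Neither slope says anything about which variable drives the other; both merely reflect the shared dependence on \(U\).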

If we concluded that \(X\) causes \(Y\), the same logic would force us to conclude that \(Y\) also causes \(X\). This violates the common-sense notion that cause and effect cannot be reversed. \(X\) and \(Y\) are statistically correlated, but this does not warrant a causal relationship. This phenomenon is often summarized as

“Correlation does not imply causation.”

The above contrived example seems to suggest the distinction between correlation and causation is clear and should be apparent to careful eyes. Quite the opposite! Human nature makes it very hard to distinguish them, as illustrated by the infamous Simpson’s paradox.

Simpson’s paradox is often presented as a compelling demonstration of how easy it is to reach paradoxical conclusions when data are interpreted superficially, without deeper analysis. It is one of the best-known puzzles in statistics. On the one hand, understanding the problem requires only elementary math. On the other hand, it has deeper psychological implications and is often interpreted as a limitation of statistical methods. Resolving the paradox requires a solid understanding of causal inference. Simpson’s paradox is deeply connected to the human desire for causal knowledge and the tendency to take correlation as causation.

Edward H. Simpson first addressed this phenomenon in a technical paper in 1951, but Karl Pearson et al. in 1899 and Udny Yule in 1903 had mentioned a similar effect earlier.1 We use the kidney stone treatment data to illustrate Simpson’s paradox. Other well-known datasets used to illustrate it include the UC Berkeley gender bias data and baseball batting average data.

| | Treatment A | Treatment B |
|---|---|---|
| Small Stones | 93% (81/87) | 87% (234/270) |
| Large Stones | 73% (192/263) | 69% (55/80) |
| Both | 78% (273/350) | 83% (289/350) |

Table 2.1 shows the success rates of two different kidney stone treatments, denoted A and B. The patients were further segmented by the size of their kidney stones. Success rates were computed for each of the four treatment/stone-size combinations, as well as overall for each treatment. In the table, treatment B has a higher success rate overall, when stone size is ignored. However, within both the small-stone and the large-stone segments, treatment A shows a higher success rate.

How could it be true? Is treatment A better or worse than treatment B?

On the surface, Simpson’s paradox can be explained by simple arithmetic. Namely, \(a_1/b_1 > c_1/d_1\) and \(a_2/b_2 > c_2/d_2\) do not imply \((a_1+a_2)/(b_1+b_2) > (c_1+c_2)/(d_1+d_2)\): ratios of sums can be ordered differently from the individual ratios. Each pooled success rate is a weighted average of the two stone-size-specific rates, weighted by that treatment's own mix of small and large stones. In the kidney stone example, small-stone cases generally have a higher success rate regardless of treatment. In the dataset, only 87/(87+263) = 24.9% of treatment A patients have small stones, whereas 270/(270+80) = 77.1% of treatment B patients do. When the groups are pooled, treatment B has a higher success rate only because it contains more patients who are easier to treat.
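The same arithmetic can be replayed directly from Table 2.1. In this plain-Python sketch (the dictionary layout is just our choice), each pooled success rate is rebuilt as a weighted average of the small- and large-stone rates. This makes visible how treatment A's heavier share of large stones drags its overall rate down.

```python
# Successes and patients per (treatment, stone size), as in Table 2.1.
data = {
    "A": {"small": (81, 87), "large": (192, 263)},
    "B": {"small": (234, 270), "large": (55, 80)},
}

def rate(successes, patients):
    return successes / patients

# Within each stone size, treatment A has the higher success rate.
for size in ("small", "large"):
    print(f"{size} stones: A = {rate(*data['A'][size]):.1%}, B = {rate(*data['B'][size]):.1%}")

overall, small_share = {}, {}
for t, groups in data.items():
    n = sum(patients for _, patients in groups.values())
    small_share[t] = groups["small"][1] / n
    # The pooled rate is a weighted average of the two stratum rates,
    # with weights given by that treatment's own mix of stone sizes.
    overall[t] = (small_share[t] * rate(*groups["small"])
                  + (1 - small_share[t]) * rate(*groups["large"]))
    print(f"treatment {t}: {small_share[t]:.1%} small stones, overall = {overall[t]:.1%}")
```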

The arithmetic is simple and the explanation seems straightforward. What makes Simpson’s paradox so important and profound?

Suppose that, as a data scientist, you are presented with the kidney stone data in aggregated form only. What conclusion would you draw? Most people would conclude that treatment B is better: treatment A's overall success rate is estimated at \(78\%\pm4.3\%\) (normal-approximation 95% confidence interval), versus \(83\%\pm4.0\%\) for treatment B. However, once you see the data segmented by stone size, you realize you might have just made a huge mistake. How could treatment B be better if treatment A is better in both small-stone and large-stone cases? You correct your earlier assessment and conclude that treatment A is better.
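Intervals of this kind can be recomputed from the aggregated counts alone. The sketch below assumes the simple normal-approximation (Wald) interval with z = 1.96, which is one common choice among several for a binomial proportion; the function name `wald_ci` is ours.

```python
import math

def wald_ci(successes, n, z=1.96):
    """Normal-approximation (Wald) interval for a binomial proportion.

    Returns the point estimate and the half-width of the interval.
    """
    p = successes / n
    standard_error = math.sqrt(p * (1 - p) / n)
    return p, z * standard_error

for name, (successes, n) in {"A": (273, 350), "B": (289, 350)}.items():
    p, half_width = wald_ci(successes, n)
    print(f"treatment {name}: {p:.1%} +/- {half_width:.1%}")
```

With n = 350 and a success rate near 0.8, the standard error is about 0.02, so the half-widths come out at roughly 4.3 and 4.0 percentage points for A and B. The two intervals overlap, but the point estimates still favor B.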

But wait a minute: what if somebody else gives you further segmented data showing that, for both small-stone and large-stone cases, treatment B is better than treatment A within every gender group? Isn’t this mathematically possible by the same arithmetic described above?

We can keep asking such questions indefinitely and reach the conclusion that no conclusion can be drawn from any type of data (pun intended). Now we see the real and unsettling problem: can we learn anything from data?

The key to resolving the paradox is simple yet profound: “correlation does not imply causation!” Why do people feel this is a paradox in the first place? And what do we mean by “learn from data”? What Simpson’s paradox reveals is the hidden truth that, as human beings, our brains are hardwired to look for causal effects.

If the difference in success rates is indeed caused by treatment A being more effective than treatment B in both small-stone and large-stone cases, then overall treatment A should be more effective than treatment B, because every patient has either a small or a large stone. In fact, we should be able to further state

\[\begin{align*} \text{Overall effect} & = \text{Effect on small stone patients}\times P(\text{patient has small stone}) \\ & + \text{Effect on large stone patients}\times P(\text{patient has large stone}). \end{align*}\]

The rationale for this argument is buried deep in the definition of causal effect: the notion of change. When we switch treatment from A to B, or vice versa, we assume the size of a patient’s kidney stone remains unchanged, because it is determined before the treatment is chosen. Therefore, the proportion of patients with small or large stones has nothing to do with our decision of which treatment to use.
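Plugging the numbers from Table 2.1 into the overall-effect formula above gives a comparison in which both treatments are evaluated on the same mix of patients. The sketch below measures effects as differences in success rates and estimates P(patient has small stone) from all 700 patients pooled; both are illustrative assumptions rather than the only possible choices.

```python
# Stratum-specific success rates from Table 2.1.
success_rate = {
    ("A", "small"): 81 / 87, ("A", "large"): 192 / 263,
    ("B", "small"): 234 / 270, ("B", "large"): 55 / 80,
}
# Assumption: P(stone size) is estimated from all 700 patients pooled, and it
# does not change with the treatment we choose (stone size precedes treatment).
p_size = {"small": (87 + 270) / 700, "large": (263 + 80) / 700}

def adjusted_rate(treatment):
    """Success rate if every patient, small or large stone, received `treatment`."""
    return sum(success_rate[(treatment, size)] * p for size, p in p_size.items())

effect_by_size = {size: success_rate[("A", size)] - success_rate[("B", size)] for size in p_size}
overall_effect = sum(effect_by_size[size] * p_size[size] for size in p_size)

print(f"adjusted success rate: A = {adjusted_rate('A'):.1%}, B = {adjusted_rate('B'):.1%}")
print(f"overall effect of A versus B = {overall_effect:+.1%}")
```

The result is roughly 83.3% for A versus 77.9% for B, an overall effect of about +5.4 percentage points in favor of A, consistent with the stone-size-specific comparisons.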

But did we explicitly claim any causal relationship? No! Before seeing the segmented data, all we observe is a positive correlation between choosing treatment B over A and a higher success rate. After the data is segmented by stone size, the correlation is reversed. The arithmetic explanation shows that treatment B looks better in the aggregated data not because of the treatment itself, but because of the higher proportion of small-stone patients in the treatment B group. In other words, the link between the choice of treatment and the success rate is not causal at all. Either treatment B was just “lucky” to receive more easy-to-treat patients with small stones, or, more likely, some self-selection mechanism steered more large-stone patients toward treatment A, more small-stone patients toward treatment B, or both, even though the dataset looks balanced, with 350 patients in each treatment group. Correlation does not imply causation: a correlation can arise from many other, possibly unobserved, confounding factors. In this case one confounder is the size of the stone. There could be more confounders; we simply cannot know. What makes this such a puzzling paradox is that correlation is not what we are looking for; causation is! Researchers collected this dataset hoping to find out which treatment is better. Our search for causal knowledge tricks us into unconsciously treating correlation as causation, hence the paradox!2

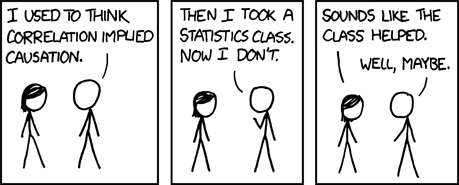

Paradox resolved! But an unnerving concern remains: Why are our brains almost hardwired to interpret correlation as causal effect? The following XKCD comic reminds us of how vulnerable we are.

Figure 2.2: Correlation(xkcd 552)

This is not a book on philosophy, but we hope some philosophical discussion can help:

- As empiricists have pointed out, causality, and knowledge in general, is learned from experience. When analyzing a dataset (a form of collected experience), we tend to treat all conclusions as causal.

- Causal relationships are much more stable and robust than correlations. As Simpson’s paradox demonstrates, a correlation might be due to many other confounders, known or unknown, observed or unobserved. Knowledge of a correlation is therefore hard to transfer to future situations; statisticians call this transferability external validity. Interpreting an empirical correlation between two events as causation tremendously simplifies our understanding of the world: Occam’s razor in action.

- Many psychological studies have demonstrated cognitive biases in which people are overly confident in their own discoveries. We naturally believe our findings reflect some form of truth. This can be seen as a type of systematic error in inductive reasoning.

Statistics, not philosophy, should provide us with the rigorous tools to keep us from falling into the trap of correlation. Unfortunately, correlation, not causation, is the firstborn in the household of statistics. The study of more than one outcome variable belongs to multivariate statistics, and many readers may be more familiar with its modern-day incarnations under other names, such as predictive analytics/modeling, machine learning, or simply AI. Given a joint probability distribution, we can predict one random variable from observations of others by exploiting the correlation structure between them. If two variables \(X\) and \(Y\) are correlated in a joint distribution, our understanding of \(X\) and \(Y\) is intertwined: if we change our estimate of \(X\), our estimate of \(Y\) also changes. In this sense, multivariate statistics and modern predictive modeling are all about correlation under some joint probability distribution.

The definition of causation also relies on change, but of a totally different kind. In correlation, changes are passively observed. In causation, we bring about the change directly through intervention. The difference between passive observation and intervention is critical and calls for proper treatment in statistics. In the former case, the underlying ground-truth joint distribution of the random variables of interest is responsible for the change but is not itself part of the change. In the latter, the joint distribution itself can be changed and disturbed by the act of intervention.
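The fork of Figure 2.1 makes this difference concrete. In the Python/NumPy sketch below, an intervention is modeled by overwriting the equation for \(X\) with a constant while leaving \(U\) and the equation for \(Y\) as they are. This “replace the mechanism” reading is an assumption borrowed from the graphical approach, and the window width 0.05 used to approximate conditioning on \(X = x_0\) is arbitrary.

```python
import numpy as np

rng = np.random.default_rng(1)
rho, n, x0 = 0.8, 400_000, 1.0

def sample_fork(n, do_x=None):
    """Sample (X, Y) from the fork U -> X, U -> Y of Figure 2.1.

    If do_x is given, the equation for X is replaced by the constant do_x
    (an intervention); U and the equation for Y are left untouched.
    """
    u = rng.normal(0.0, rho, size=n)
    noise_sd = np.sqrt(1.0 - rho**2)
    if do_x is None:
        x = u + rng.normal(0.0, noise_sd, size=n)
    else:
        x = np.full(n, do_x)
    y = u + rng.normal(0.0, noise_sd, size=n)
    return x, y

# Passive observation: keep the units whose X happens to fall near x0.
x, y = sample_fork(n)
near_x0 = np.abs(x - x0) < 0.05
observed_mean = y[near_x0].mean()

# Intervention: set X = x0 for every unit; Y's mechanism does not involve X.
_, y_do = sample_fork(n, do_x=x0)
intervened_mean = y_do.mean()

print(f"observing X near {x0}: mean of Y = {observed_mean:.3f} (rho^2 * x0 = {rho**2 * x0:.2f})")
print(f"setting X = {x0}:      mean of Y = {intervened_mean:.3f} (intervention leaves it at 0)")
```

Observing a large \(X\) is informative about \(U\) and hence about \(Y\), so the conditional mean of \(Y\) moves to about \(\rho^2 x_0\). Setting \(X\) by hand cuts the link to \(U\), so the mean of \(Y\) stays near 0: here the correlation carries no causal effect at all.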

At its core, causal inference is about how we reason and talk about intervention using appropriate statistical vocabulary. A direct method following the discussion above is to study the impact of an intervention on the joint distribution and to quantify the causal effect of an intervention on a focal random variable using post-intervention joint distributions. This approach was pioneered by Judea Pearl through a series of works starting in the 1980s, culminating in a new causal graphical model with a new algebra called the do-calculus.3 An alternative approach is to augment the joint distribution with the idea of potential outcomes. A third approach, with a much longer history, is randomization. Randomization is the most straightforward way to learn the effect of an intervention: by actually intervening. The next few chapters go through all these approaches and explain how they are connected to each other.
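A toy simulation shows why actually intervening helps. Everything in this sketch is hypothetical: we pretend the stone-size-specific rates of Table 2.1 are the true success probabilities, and we invent a self-selection rule that steers small-stone patients toward B. Under self-selection, the raw comparison reproduces the misleading aggregate ordering; under coin-flip assignment, it lands near +5.4 percentage points, which is what weighting the two stone-size-specific differences by the overall share of small and large stones gives.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 200_000

# Hypothetical data-generating process: a patient's success probability depends
# on (treatment, stone size) and is set equal to the observed rates in Table 2.1.
p_success = {("A", "small"): 81 / 87, ("A", "large"): 192 / 263,
             ("B", "small"): 234 / 270, ("B", "large"): 55 / 80}
small = rng.random(n) < 357 / 700          # stone size is fixed before treatment

def raw_difference(treat_a):
    """Simulate outcomes, then return the unadjusted success rate of A minus B."""
    p = np.where(
        treat_a,
        np.where(small, p_success[("A", "small")], p_success[("A", "large")]),
        np.where(small, p_success[("B", "small")], p_success[("B", "large")]),
    )
    success = rng.random(n) < p
    return success[treat_a].mean() - success[~treat_a].mean()

# Self-selection (assumed): small-stone patients mostly end up on B, large-stone
# patients mostly on A, with allocation probabilities mimicking Table 2.1.
selected_a = np.where(small, rng.random(n) < 87 / 357, rng.random(n) < 263 / 343)
# Randomization: a fair coin assigns treatment, independently of stone size.
randomized_a = rng.random(n) < 0.5

diff_selected = raw_difference(selected_a)
diff_randomized = raw_difference(randomized_a)
print(f"self-selected: A - B = {diff_selected:+.3f}")
print(f"randomized:    A - B = {diff_randomized:+.3f}")
```

Because the coin ignores stone size, both arms end up with the same mix of patients, which is exactly the condition the overall-effect formula takes for granted.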

Simpson’s paradox is also called the Yule-Simpson effect.

Simpson’s paradox also links to Savage’s sure-thing principle: “[Let f and g be any two acts], if a person prefers f to g, either knowing that the event B obtained, or knowing that the event not-B obtained, then he should prefer f to g even if he knows nothing about B.” The sure-thing principle, too, has an implicit causal setup.

Judea Pearl received the Turing Award for “fundamental contributions to artificial intelligence through the development of a calculus for probabilistic and causal reasoning.”